Learning Barometer Constructs

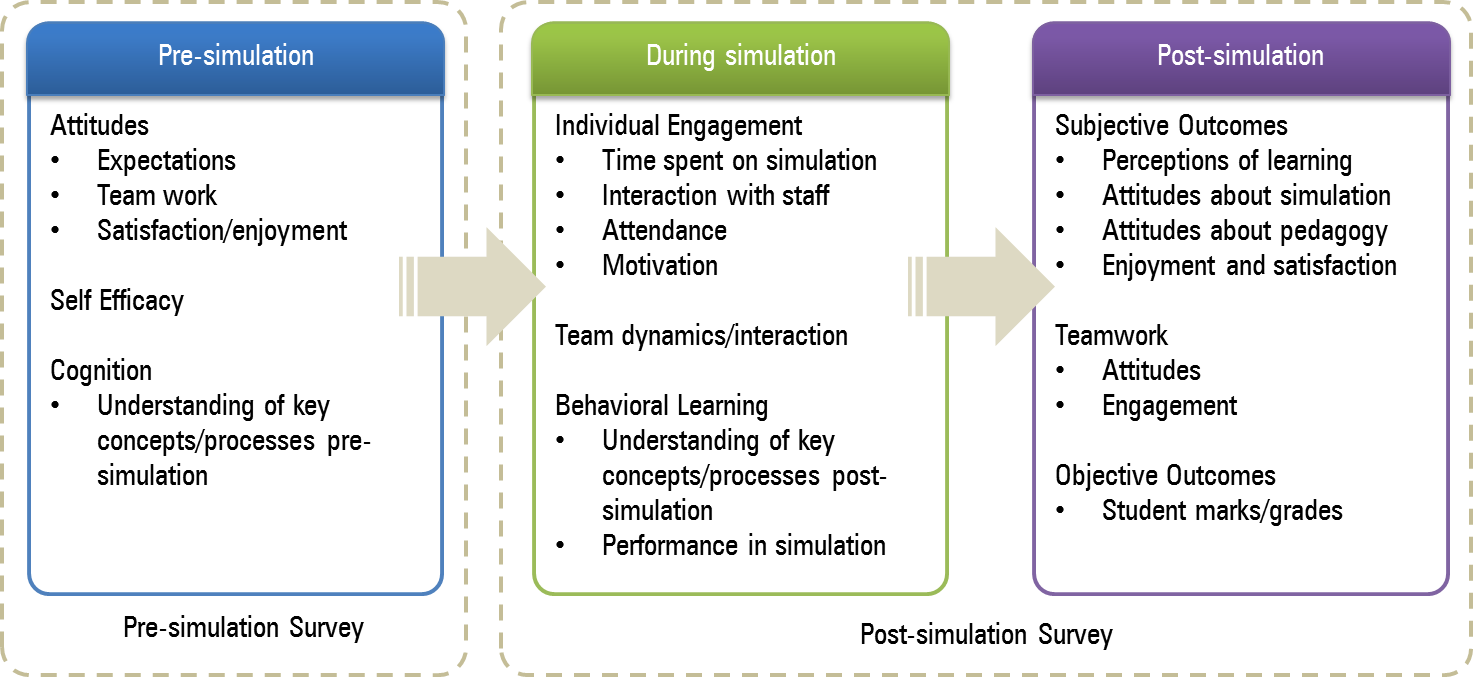

The barometer consists of pre-simulation and post-simulation surveys, which are used to measure changes in student perceptions of learning outcomes. The barometer also includes problem-based questions designed to measure cognitive change for a number of commonly used simulations. This approach is supported by other researchers (Cronan & Douglas, 2012; Foster, 2011; Hsu, 1989; Seethamraju, 2011). The barometer is built on the premise that the impact of simulations can be measured by monitoring different variables before, during and after the simulation (see Figure 1).

Figure 1. Key constructs measured by the Simulation Learning Barometer

Pre-simulation

Antecedents to learning include attitudes about learning expectations, teamwork and enjoyment, as well as self-efficacy (Ineson, Jung, Hains, & Kim, 2013). The barometer measures several of these antecedents using self-reported rating scales developed from the literature, student focus groups and trial surveys.

The learning outcomes of simulations have been tested with cognitive measures, such as student grades, and with affective measures, such as student perceptions of learning and student satisfaction. The complexities of measuring learning outcomes have been acknowledged, and attempts to measure the learning outcomes of simulations have produced mixed results (Anderson & Lawton, 2009; De Freitas & Jarvis, 2007; Keys & Wolfe, 1989). Gosen and Washbush (2004) reviewed the problems of simulation research and grouped them into three areas: correlates of performance, validity of simulations, and the nature of the instruments used to measure the effectiveness of simulations. Early research in the field assumed that simulation performance could be used as a proxy for learning, but this assumption has proved inaccurate (Batista & Cornachione, 2005). In a meta-analysis of research on simulations, only 32 of the 248 studies evaluated could be included, owing to methodological and reporting flaws (Vogel et al., 2006). The absence of a theoretical framework and a lack of rigour in design have been identified as problems with previous efforts to measure the impact of simulations (Ruben, 1999; Wu et al., 2012). Finally, much of the research is conceptual rather than empirical (Feinstein & Parks, 2002), as is evident from the limited empirical testing and measurement in the field.

In evaluating simulations, very few studies have reported both subjective and objective measures (Anderson & Lawton, 2009; Cronan, Léger, Robert, Babin, & Charland, 2012). These are sometimes described as indirect and direct measures, respectively (Lo, 2010).

In the Simulation Learning Barometer, objective measures will be captured through a problem-based scenario, while behavioural learning will be measured through students’ performance in the simulation. The problem-based scenario provides a baseline for the post-simulation survey, but the scenario will need to be adapted for different simulators. Subjective measures will include self-reported expectations and perceptions of the knowledge and skills acquired during the simulation (Batista & Cornachione, 2005). These are measured before the simulation by asking students what they expect to learn; the responses are then compared with students’ perceptions of what they learned, as reported in the post-simulation survey.
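The comparison of what students expected to learn with what they later report having learned amounts to a paired difference for each student who completed both surveys. The sketch below is a minimal illustration, assuming each student already has one numeric expected-learning score (pre-simulation, Q2 items 1 to 9) and one perceived-learning score (post-simulation, Q2 items 1 to 9), keyed by student ID. The function name, IDs and scores are hypothetical values for illustration, not barometer data.

```python
from __future__ import annotations

from statistics import mean


def expectation_gaps(pre: dict[str, float], post: dict[str, float]) -> dict[str, float]:
    """Return post-minus-pre differences for students present in both surveys.

    `pre` maps student ID -> expected-learning score (pre-simulation survey).
    `post` maps student ID -> perceived-learning score (post-simulation survey).
    A positive value means the student reports learning more than expected.
    Students who completed only one survey are left out of the comparison.
    """
    return {sid: post[sid] - pre[sid] for sid in pre.keys() & post.keys()}


# Hypothetical scores for illustration only.
pre_scores = {"s01": 3.2, "s02": 4.0, "s03": 2.5}
post_scores = {"s01": 4.1, "s02": 3.6}  # s03 did not complete the post-survey

gaps = expectation_gaps(pre_scores, post_scores)
average_gap = mean(list(gaps.values()))
print(gaps, average_gap)
```

Restricting the comparison to students who answered both surveys keeps the difference genuinely paired; whether a paired test or another analysis is then applied is a separate choice the text does not specify.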

Table 1. Pre-simulation survey items

| Constructs | Measurement |
|---|---|
| Expected learning outcomes | Section 1: Learning from simulations, Q2 (items 1 to 9) |
| Bloom’s Taxonomy | Section 1: Learning from simulations, Q2 (items 10 to 14) |
| Expected enjoyment | Section 1: Learning from simulations, Q3 (items 1 to 6) |
| Collaboration | Section 2: Teamwork, Q4 (items 1 to 7) |
| Self-efficacy | Section 3: Individual engagement, Q5 (items 1 to 9) |
| Cognition | Section 4: Problem solving case study, Q6 to Q12 |
| Demographics | Section 5: About You, Q13 to Q21 |
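Table 1 maps each construct to a block of survey items. One common way to turn such a mapping into per-student construct scores is to average the rating-scale items in each block. The sketch below assumes that averaging rule, numeric rating scales, and a hypothetical export format in which item 3 of Q2 appears as `Q2_3`; the scoring rule is an assumption, not the barometer’s documented method. The Cognition and Demographics rows are omitted because they are not rating scales.

```python
from __future__ import annotations

from statistics import mean

# Table 1 encoded as construct -> (question, first item, last item).
# Column names such as "Q2_1" are a hypothetical export format.
PRE_SURVEY_CONSTRUCTS = {
    "expected_learning_outcomes": ("Q2", 1, 9),
    "blooms_taxonomy": ("Q2", 10, 14),
    "expected_enjoyment": ("Q3", 1, 6),
    "collaboration": ("Q4", 1, 7),
    "self_efficacy": ("Q5", 1, 9),
}


def construct_scores(response: dict[str, int]) -> dict[str, float | None]:
    """Average the rating-scale items belonging to each construct.

    Items a student skipped (missing keys) are ignored; a construct with
    no answered items is scored None rather than zero.
    """
    scores: dict[str, float | None] = {}
    for construct, (question, first, last) in PRE_SURVEY_CONSTRUCTS.items():
        answers = [
            response[f"{question}_{item}"]
            for item in range(first, last + 1)
            if f"{question}_{item}" in response
        ]
        scores[construct] = mean(answers) if answers else None
    return scores


# Example: a student who answered Q2 items 1 to 9 and two of the Q3 items.
example = {f"Q2_{i}": 4 for i in range(1, 10)}
example.update({"Q3_1": 5, "Q3_2": 3})
print(construct_scores(example))
```

Keeping the item ranges in one table-like dictionary means the same function can score the post-simulation survey by swapping in a second mapping, though constructs with non-contiguous items (as in Table 2) would need explicit item lists instead of ranges.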

During the Simulation

Although these items relate to inputs and processes during the simulation, they are measured at the end of the simulation using the post-simulation survey. Chaparro-Peláez et al. (2013) identified three factors that affect students’ perceived learning: satisfaction, time dedication and collaborative learning. Online business simulations generally require students to work in teams to plan, coordinate and manage a virtual business. Students learn by developing knowledge and understanding from their experiences and interactions with others through a process of social constructivism (Boulos, Maramba, & Wheeler, 2006; Jonassen, Peck, & Wilson, 1999). Simulations provide fertile opportunities for constructivist learning because they provide multiple representations of reality, attempt to represent the natural complexity of the real world and attempt to replicate authentic tasks (Lainema & Makkonen, 2003). The barometer includes items designed to measure engagement and collaboration through teamwork.

The problem-based case study used in the pre-simulation survey is presented again in the post-simulation survey to measure whether students have developed a better understanding of key concepts and processes.

Post-simulation

The barometer draws on Bloom’s taxonomy to evaluate the learning outcomes of simulations. Hsu (1989) argues that the outcomes of simulations should be measured across all three of Bloom’s domains: cognitive, affective and psychomotor learning. Previous simulation research has found that learning occurs when a personally responsible participant cognitively, affectively and behaviourally processes knowledge, skills and/or attitudes in a learning situation (Agnello, Pikas, Agnello, & Pikas, 2011). Cognitive learning can be described as developing an understanding of basic facts. Affective learning occurs when simulation participants perceive that they have learned and report positive attitudes and satisfaction. Behavioural learning, in turn, might be described as participants taking those facts and formulating correct decisions or actions (Agnello et al., 2011). Behavioural learning should demonstrate problem analysis, decision-making and the application of cross-functional skills (Hermens & Clarke, 2009). The barometer requires students to reflect on what they have learned by responding to a series of scale items representing cognitive, affective and behavioural outcomes, and skills at different levels of Bloom’s taxonomy.

Table 2. Post-simulation Survey items

| Constructs | Measurement |
|---|---|
| Perceived learning outcomes | Section 1: Learning from simulations. Q2 (items 1 to 9): business knowledge and skills; Q2 (items 10 to 14): Bloom’s Taxonomy |
| Simulation attitudes | Section 1: Learning from simulations. Q3 (items 1 to 5): attitude; Q3 (items 6 to 8): career readiness; Q3 (items 9 to 12): satisfaction |
| Pedagogy | Section 2: Learning activities. Q4 (items): learning activities; Q4 (items 2, 3, 4): assessment tasks; Q4 (item 5): user interface; Q4 (items 6 to 11): resources; Q4 (items 12 to 14): course satisfaction |
| Collaboration | Section 3: Teamwork. Q5 (items 1, 3, 5, 10, 12, 14): collaborative learning; Q5 (items 9, 11, 13) and Q23: group dedication; Q5 (items 2, 4, 6, 7, 8): socially shared metacognition; Q5 (items 15, 16, 17, 18): individual outcomes; Q7 to Q8: online engagement |
| Collaboration attitudes | Section 3: Teamwork. Q6 (items 1 to 8) |
| Self-efficacy | Section 4: Individual engagement. Q10 (items 1 to 9) |
| Engagement | Section 4: Individual engagement. Q9 (items 105); Section 6: About You. Q18, Q19, Q20, Q21, Q22 |
| Cognition and performance | Section 5: Problem solving case study. Q11 to Q17; directly observed performance in the simulation |

Subjective measures include students’ perceptions of learning from the simulation, attitudes toward the simulation, collaboration and self-efficacy.

- Evaluation of the simulation includes perceived cognitive outcomes, which are an indirect measure of cognitive outcomes. Students’ perceived cognitive outcomes are their perceptions of learning across a range of skills, for example the development of skills in finance, marketing and HR. More advanced skills, such as problem solving and critical thinking, were also included in line with Bloom’s taxonomy.

- Students’ attitudes toward the simulation include affective attitudinal statements of enjoyment and satisfaction. They also include perceptions of whether the simulation assists their future career prospects and communication skills. Positive attitudes and satisfaction have been found to improve student learning.

- Students’ experience of teamwork is captured through their attitudes toward group work and their perceptions of collaborative and social cognitive learning.

- Individual engagement is captured through motivation, level of self-directed learning, and self-efficacy.

Because pedagogy is a major focus of the project, the project also measures students’ attitudes toward resources, learning activities and assessment tasks. Student performance and grades are an important part of the barometer but are not captured in the survey, because educators already hold this information. Students are asked for their ID number so that survey responses can be matched to these grades.
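Matching survey responses to grades by student ID is a simple join. The sketch below is a minimal illustration, assuming survey responses are records with a hypothetical `student_id` field and that the educator’s grades are keyed by the same ID format; the field names, function name and sample values are illustrative assumptions. Unmatched IDs are reported rather than silently dropped, since a mistyped ID would otherwise remove a student from the analysis without notice.

```python
from __future__ import annotations


def match_to_grades(
    responses: list[dict], grades: dict[str, float]
) -> tuple[list[dict], list[str]]:
    """Attach each student's grade to their survey response.

    IDs are normalised to stripped strings before lookup. Responses whose
    ID has no grade on record are returned separately for manual checking.
    """
    matched: list[dict] = []
    unmatched: list[str] = []
    for response in responses:
        student_id = str(response["student_id"]).strip()
        if student_id in grades:
            matched.append({**response, "student_id": student_id, "grade": grades[student_id]})
        else:
            unmatched.append(student_id)
    return matched, unmatched


# Hypothetical records for illustration only.
survey = [
    {"student_id": " 1001 ", "self_efficacy": 3.8},
    {"student_id": "1002", "self_efficacy": 4.2},
    {"student_id": "9999", "self_efficacy": 2.9},
]
grade_book = {"1001": 72.0, "1002": 85.5}

matched, unmatched = match_to_grades(survey, grade_book)
print(matched, unmatched)
```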

Useful References

Agnello, V., Pikas, B., Agnello, A. J., & Pikas, A. (2011). Today's learner, preferences in teaching techniques. American Journal of Business Education, 4(2), 1-9.

Anderson, P. H., & Lawton, L. (2009). Business simulations and cognitive learning: Developments, desires, and future directions. Simulation & Gaming, 40(2), 193-216.

Arbaugh, J. B., & Hornik, S. (2006). Do Chickering and Gamson's seven principles also apply to online MBAs? The Journal of Educators Online, 3(2), 1-18.

Bandura, A. (2006). Guide for constructing self-efficacy scales. In F. Pajares & T. Urdan (Eds.), Self-efficacy beliefs of adolescents. Greenwich, CT: Information Age Publishing.

Batista, I. V. C., & Cornachione, E. B. (2005). Learning styles influences on satisfaction and perceived learning: Analysis of an online business game. Developments in Business Simulation & Experiential Learning, 32, 22-30.

Boulos, M. N. K., Maramba, I., & Wheeler, S. (2006). Wikis, blogs and podcasts: A new generation of Web-based tools for virtual collaborative clinical practice and education. BMC Medical Education, 6, 41.

Brownell, J., & Jameson, D. A. (2004). Problem-based learning in graduate management education: An integrative model and interdisciplinary application. Journal of Management Education, 28(5), 558-577.

Chaparro-Peláez, J., Iglesias-Pradas, S., Pascual-Miguel, F. J., & Hernández-García, Á. (2013). Factors affecting perceived learning of engineering students in problem based learning supported by business simulation. Interactive Learning Environments, 21(3), 244-262.

Coffey, B. S., & Anderson, S. A. (2006). The students' view of a business simulation: Perceived value of the learning experience. Journal of Strategic Management Education, 3, 151-168.

Cronan, T. P., & Douglas, D. E. (2012). A student ERP simulation game: A longitudinal study. The Journal of Computer Information Systems, 53(1), 3-13.

Cronan, T. P., Léger, P.-M., Robert, J., Babin, G., & Charland, P. (2012). Comparing objective measures and perceptions of cognitive learning in an ERP simulation game: a game research note. Simulation & Gaming, 43(4), 461-480.

Crowe, A., Dirks, C., & Wenderoth, M. P. (2008). Biology in Bloom: Implementing Bloom's taxonomy to enhance student learning in biology. CBE-Life Sciences Education, 7(4), 368-381.

Feinstein, A. H., & Parks, S. J. (2002). The use of simulation in hospitality as an analytic tool and instructional system: A review of the literature. Journal of Hospitality & Tourism Research, 26(4), 396-421.

Ferreira, R. R. (1997). Measuring student improvement in a hospitality computer simulation. Journal of Hospitality & Tourism Education, 9(3), 58-61.

Foster, A. N. (2011). The process of learning in a simulation strategy game: Disciplinary knowledge construction. Journal of Educational Computing Research, 45(1), 1-27.

Gijbels, D., Dochy, F., Van den Bossche, P., & Segers, M. (2005). Effects of Problem-Based Learning: A Meta-Analysis From the Angle of Assessment. Review of Educational Research, 75(1), 27-61.

Gosen, J., & Washbush, J. (2004). A review of scholarship on assessing experiential learning effectiveness. Simulation & Gaming, 35(2), 270-293.

Hermens, A., & Clarke, E. (2009). Integrating blended teaching and learning to enhance graduate attributes. Education + Training, 51(5/6), 476-490.

Hsu, E. (1989). Role-event gaming simulation in management education. Simulation & Games, 20(4), 409-438.

Huang, H.-M., Rauch, U., & Liaw, S.-S. (2010). Investigating learners’ attitudes toward virtual reality learning environments: Based on a constructivist approach. Computers & Education, 55, 1171-1182.

Hurme, T.-R. (2010). Metacognition in group problem solving - A quest for socially shared metacognition. University of Oulu, Finland.

Ineson, E. M., Jung, T., Hains, C., & Kim, M. (2013). The influence of prior subject knowledge, prior ability and work experience on self-efficacy. Journal of Hospitality, Leisure, Sport and Tourism Education, 12, 59-69.

Jonassen, D. H., Peck, K. L., & Wilson, B. G. (1999). Learning with technology: A constructivist perspective. Columbus, OH: Prentice Hall.

Kendall, K. W., & Harrington, R. J. (2003). Strategic management education incorporating written or simulation cases: An empirical test. Journal of Hospitality & Tourism Research, 27(2), 143-165.

Lizzio, A., Wilson, K., & Simons, R. (2002). University students' perceptions of the learning environment and academic outcomes: implications for theory and practice. Studies in Higher Education, 27(1), 27-52.

Lo, C. C. (2010). How student satisfaction factors affect perceived learning. Journal of the Scholarship of Teaching and Learning, 10(1), 47-54.

Martin, D., & McEvoy, B. (2003). Business simulations: a balanced approach to tourism education. International Journal of Contemporary Hospitality Management, 15(6), 336-339.

Miller, J. S. (2004). Problem-based learning in organizational behavior class: Solving students’ real problems. Journal of Management Education, 28(5), 578-590.

Ocker, R. J., & Yaverbaum, G. J. (2001). Collaborative learning environments: Exploring student attitudes and satisfaction in face-to-face and asynchronous computer conferencing settings. Journal of Interactive Learning Research, 12(4), 427-448.

Ruben, B. D. (1999). Simulations, games and experience-based learning: The quest for a new paradigm for teaching and learning. Simulation & Gaming, 30(4), 498-505.

Seethamraju, R. (2011). Enhancing student learning of enterprise integration and business process orientation through an ERP business simulation game. Journal of Information Systems Education, 22(1), 19-29.

Spence, L. (2001). Problem based learning: Lead to learn, learn to lead. In Problem based learning handbook (pp. 1-12). University Park: Penn State University, College of Information Sciences and Technology.

Teo, T., & Wong, S. L. (2013). Modeling key drivers of e-learning satisfaction among student teachers. Journal of Educational Computing Research, 48(1), 71-95.

Vogel, J. J., Vogel, D. S., Cannon-Bowers, J., Bowers, C. A., Muse, K., & Wright, M. (2006). Computer gaming and interactive simulations for learning: A meta-analysis. Journal of Educational Computing Research, 34(3), 229-243.

Vos, L., & Brennan, R. (2010). Marketing simulation games: student and lecturer perspectives. Marketing Intelligence & Planning, 28(7), 882-897.

Wu, W.-H., Chiou, W.-B., Kao, H.-Y., Hu, C.-H., & Huang, S.-H. (2012). Re-exploring game-assisted learning research: The perspective of learning theoretical bases. Computers & Education, 59, 1153-1161.